Supervised vs. unsupervised learning: Experts define the gap

Learn the characteristics of supervised learning, unsupervised learning and semisupervised learning and how they're applied in machine learning projects.

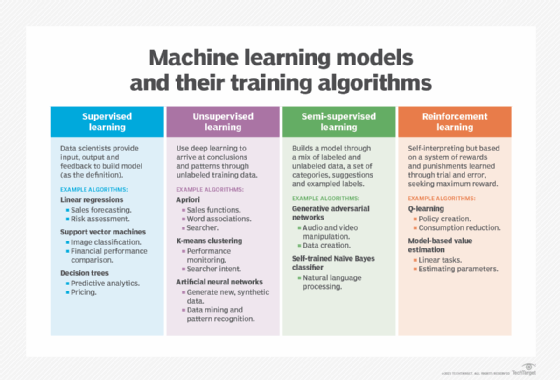

Supervised learning tends to get the most publicity in discussions of artificial intelligence techniques since it's often the last step used to create the AI models for things like image recognition, better predictions, product recommendation and lead scoring.

In contrast, unsupervised learning tends to work behind the scenes earlier in the AI development lifecycle: It is often used to set the stage for the supervised learning's magic to unfold, much like the grunt work that enablesa manager to shine. Both modes of machine learning are usefully applied to business problems, as explained later.

On a technical level, the difference between supervised vs. unsupervised learning centers on whether the raw data used to create algorithms has been pre-labeled (supervised learning) or not pre-labeled (unsupervised learning).

Let's dive in.

What is supervised learning?

In supervised learning, data scientists feed algorithms with labeled training data and define the variables they want the algorithm to assess for correlations.

Both the input data and the output variables of the algorithm are specified in the training data. For example, if you are trying to train an algorithm to know if a picture has a cat in it using supervised learning, you would create a label for each picture used in the training data indicating whether the image does or does not contain a cat.

As explained in our definition of supervised learning: "[A] computer algorithm is trained on input data that has been labeled for a particular output. The model is trained until it can detect the underlying patterns and relationships between the input data and the output labels, enabling it to yield accurate labeling results when presented with never-before-seen data." Common types of supervised algorithms include classification, decision trees, regression and predictive modeling, which you can learn about in this tutorial on machine learning from Arcitura Education.

Supervised machine learning techniques are used in a variety of business applications -- see Figure 1 -- including the following:

- Personalized marketing.

- Insurance/credit underwriting decisions.

- Fraud detection.

- Spam filtering.

What is unsupervised learning?

In unsupervised learning, an algorithm suited to this approach -- K-means clustering is an example -- is trained on unlabeled data. It scans through data sets looking for any meaningful connection. In other words, unsupervised learning determines the patterns and similarities within the data, as opposed to relating it to some external measurement.

This approach is useful when you don't know what you're looking for and less useful when you do. If you showed the unsupervised algorithm many thousands or millions of pictures, it might come to categorize a subset of the pictures as images of what humans would recognize as felines. In contrast, a supervised algorithm trained on labeled data of cats versus canines is able to identify images of cats with a high degree of confidence. But there is a tradeoff with this approach: If the supervised learning project takes millions of labeled images to develop the model, the machine-generated prediction requires a lot of human effort.

There is a middle ground: semisupervised learning.

Aaron Kalb

Aaron Kalb

What is semisupervised learning?

Semisupervised learning is a sort of shortcut that combines both approaches. Semisupervised learning describes a specific workflow in which unsupervised learning algorithms are used to automatically generate labels, which can be fed into supervised learning algorithms. In this approach, humans manually label some images, unsupervised learning guesses the labels for others, and then all these labels and images are fed to supervised learning algorithms to create an AI model.

Semisupervised learning can lower the cost of labeling the large data sets used in machine learning. "If you can get humans to label 0.01% of your millions of samples, then the computer can leverage those labels to significantly increase its predictive accuracy," said Aaron Kalb, co-founder and chief innovation officer of Alation, an enterprise data catalog platform.

What is reinforcement learning?

Another machine learning approach is reinforcement learning. Typically used to teach a machine to complete a sequence of steps, reinforcement learning is different from both supervised and unsupervised learning. Data scientists program an algorithm to perform a task, giving it positive or negative cues, or reinforcement, as it works out how to do the task. The programmer sets the rules for the rewards but leaves it to the algorithm to decide on its own what steps it needs to take to maximize the reward -- and, therefore, complete the task.

Shivani Rao

Shivani Rao

When should supervised learning vs. unsupervised learning be used?

Shivani Rao, manager of machine learning at LinkedIn, said the best practices for adopting a supervised or unsupervised machine learning approach are often dictated by the circumstances, assumptions you can make about the data and application.

The choice of using supervised learning versus unsupervised machine learning algorithms can also change over time, Rao said. In the early stages of the model building process, data is commonly unlabeled, while labeled data can be expected in the later stages of modeling.

For example, for a problem that predicts if a LinkedIn member will watch a course video, the first model is based on an unsupervised technique. Once these recommendations are served, a metric recording whether someone clicks on the recommendation provides new data to generate a label.

LinkedIn has also used this technique for tagging online courses with skills that a student might want to acquire. Human labelers, such as an author, publisher or student, can provide a precise and accurate list of skills that the course teaches, but it is not possible for them to provide an exhaustive list of such skills. Hence, this data can be thought of as incompletely tagged. These types of problems can use semisupervised techniques to help build a more exhaustive set of tags.

Bharath Thota

Bharath Thota

Data science and advanced analytics expert Bharath Thota, partner at consulting firm Kearney, said that practical considerations also tend to govern his team's choice of using supervised or unsupervised learning.

"We choose supervised learning for applications when labeled data is available and the goal is to predict or classify future observations," Thota said. "We use unsupervised learning when labeled data is not available and the goal is to build strategies by identifying patterns or segments from the data."

Alation data scientists use unsupervised learning internally for a variety of applications, Kalb said. For example, they have developed a human-computer collaboration process for translating arcane data object names into human language, e.g., "na_gr_rvnu_ps" into "North American Gross Revenue from Professional Services." In this case, the machines guess, humans confirm and machines learn.

"You could think of it as semisupervised learning in an iterative loop, creating a virtuous cycle of increased accuracy," Kalb said.

5 unsupervised learning techniques

At a high level, supervised learning techniques tend to focus on either linear regression (fitting a model to a collection of data points for prediction) or classification problems (does an image have a cat or not?).

Unsupervised learning techniques often complement the work of supervised learning using a variety of ways to slice and dice raw data sets, including the following:

- Data clustering. Data points with similar characteristics are grouped together to help understand and explore data more efficiently. For example, a company might use data clustering methods to segment customers into groups based on their demographics, interests, purchasing behavior and other factors.

- Dimensionality reduction. Each variable in a data set is considered a separate dimension. However, many models work better by analyzing a specific relationship between variables. A simple example of dimensionality reduction is using profit as a single dimension, which represents income minus expenses -- two separate dimensions. However, more sophisticated types of new variables can be generated using algorithms such as principal component analysis, autoencoders, algorithms that convert text into vectors or T-distributed Stochastic Neighbor Embedding.

Dimensionality reduction can help reduce the curse of overfitting, in which a model works well for a small data set but does not generalize well to new data. This technique also enables companies to visualize high-dimensional data in 2D or 3D, which humans can easily understand. - Anomaly or outlier detection. Unsupervised learning can help identify data points that fall out of the regular data distribution. Identifying and removing the anomalies as a data preparation step may improve the performance of machine learning models.

- Transfer learning. These algorithms utilize a model that was trained on a related but different task. For example, transfer learning techniques would make it easy to fine-tune a classifier trained on Wikipedia articles to tag arbitrary new types of text with the right topics. LinkedIn's Rao said this is one of most efficient and quickest ways to solve a data problem where there are no labels.

- Graph-based algorithms. These techniques attempt to build a graph that captures the relationship between the data points, Rao said. For example, if each data point represents a LinkedIn member with skills, then a graph can be used to represent members, where the edge indicates the skill overlap between members. Graph algorithms can also help transfer labels from known data points to unknown but strongly related data points. Unsupervised learning can also be used for building a graph between entities of different types (the source and the target). The stronger the edge, the higher the affinity of the source node to the target node. For example, LinkedIn has used them to match members with courses based on skills.

Supervised vs. unsupervised learning in finance

Tom Shea, CEO of OneStream Software, a corporate performance management platform, said supervised learning is often used in finance for building highly precise models, whereas unsupervised techniques are better suited for back-of-the-envelope types of tasks.

In supervised learning projects, data scientists work with finance teams to utilize their domain expertise on key products, pricing and competitive insights as a critical element for demand forecasting. The domain expertise is particularly germane in more granular levels of forecasting needs where every region, product and even SKU have unique experiences and require intuition. These types of models derived from supervised learning can help to improve forecast accuracy and resulting inventory holding metrics.

Shea sees unsupervised learning being used to improve regional or divisional management jobs that don't require the direct domain knowledge of supervised learning. For example, unsupervised learning could help identify the normal rate of spending among a group of related items and the outliers. This is particularly useful in analyzing large transactional data sets -- e.g., orders, expenses and invoicing -- as well helping increase accuracy during the financial close processes.